The robots.txt file plays an important role in SEO. It contains directives that tell search engine crawlers, such as those used by Google and Yahoo, which pages of your website they may crawl and which they should skip.
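To show how a crawler reads these directives, here is a minimal sketch that feeds a sample robots.txt to Python's standard-library `urllib.robotparser` and asks which paths a crawler may fetch. The rules and paths (`/checkout/`, `/drafts/`) are hypothetical examples, not your site's actual file. Python's parser applies the first rule that matches a path, while Google documents longest-match precedence, so results can differ when Allow and Disallow rules overlap. The sample avoids overlapping rules for that reason.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /drafts/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text locally, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether a crawler identifying as "Googlebot" may fetch each path.
for path in ["/", "/blog/first-post", "/checkout/cart", "/drafts/new-page"]:
    allowed = parser.can_fetch("Googlebot", path)
    print(f"{path}: {'crawl' if allowed else 'skip'}")
```

Running it prints `crawl` for `/` and `/blog/first-post` and `skip` for the two paths under the disallowed folders.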

If you want better control of crawl requests to your site, you can edit your robots.txt file.

By default, the robots.txt file allows bots to crawl all of your site’s pages. Pages that you have hidden from search engines in the Site Editor remain hidden, even with this default.
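A common way to write an "allow everything" robots.txt is a catch-all `User-agent: *` group with an empty `Disallow:` value. The sketch below assumes that content for illustration; the file your site generates by default may look different. It uses Python's standard `urllib.robotparser` to confirm that such a file blocks nothing.

```python
from urllib.robotparser import RobotFileParser

# Assumed "allow all" content; the site's real default file may differ.
ALLOW_ALL = """\
User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ALLOW_ALL.splitlines())

# An empty Disallow value blocks nothing, so every path is crawlable.
for path in ["/", "/about", "/blog/some-post"]:
    print(path, parser.can_fetch("Googlebot", path))
```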

Editing your robots.txt file

To edit your robots.txt file:

1. In the Site Editor, open Settings from the left-hand sidebar.

2. Click “Search Engines”.

3. Click “Robots.txt”:

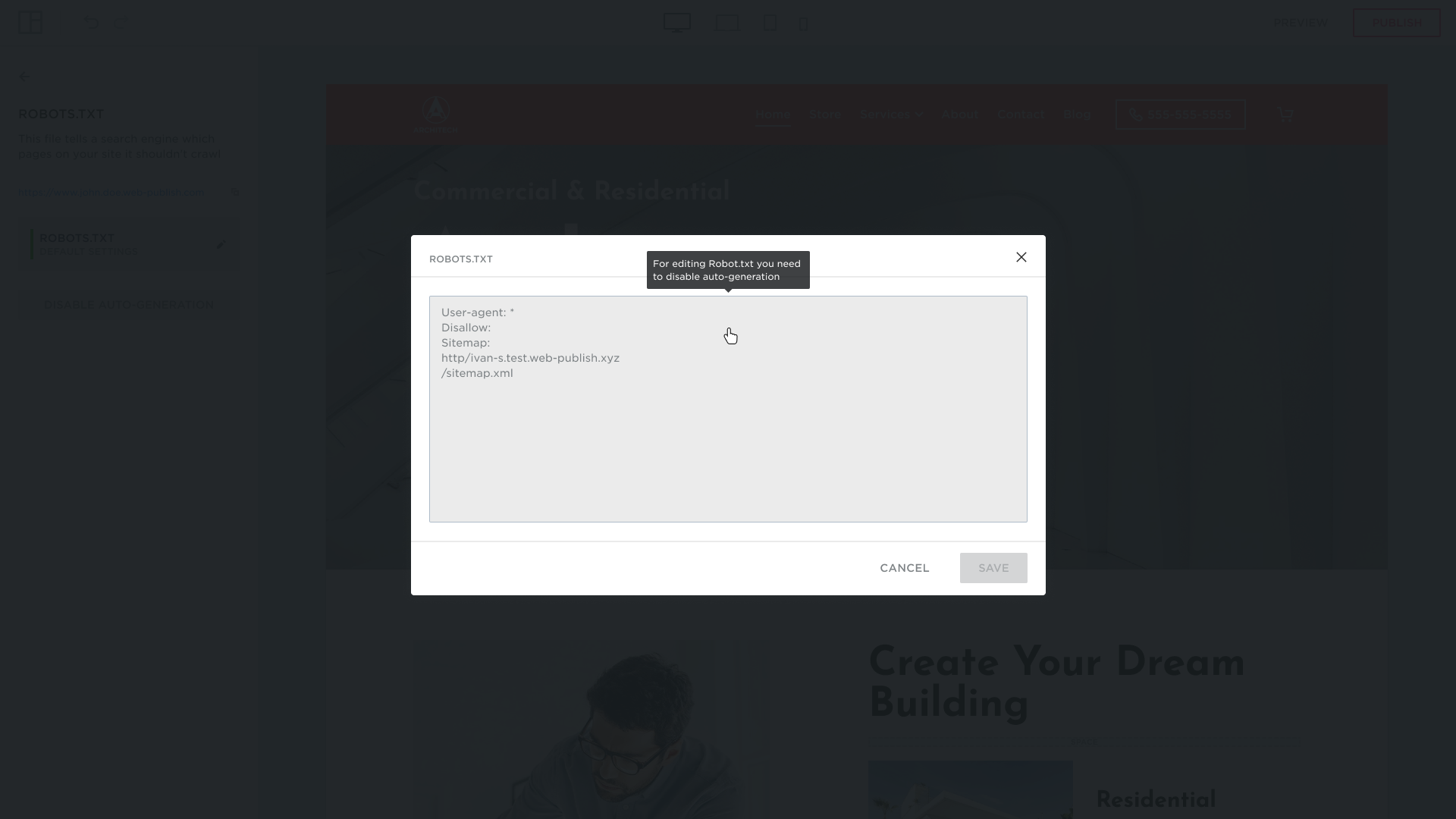

4. To edit the robots.txt file, you first need to disable auto-generation. Click the “Disable auto-generation” button.

5. Confirm by clicking Yes in the pop-up.

6. Click the edit icon that appears on the right to start editing the file.

7. Click Save when you have finished editing.
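Because a single mistyped rule such as `Disallow: /` can block your whole site, it can help to check a draft before you click Save. The sketch below is one way to do that on your own computer with Python's standard `urllib.robotparser`; it does not run inside the Site Editor. The function name `blocked_paths` and the sample paths are hypothetical, chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, paths: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the paths from `paths` that `agent` would not be allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(agent, p)]


# Hypothetical draft: the author meant to block /private/ but typed "/".
draft = """\
User-agent: *
Disallow: /
"""

# Pages you expect search engines to reach (hypothetical examples).
must_stay_crawlable = ["/", "/products", "/contact"]

problems = blocked_paths(draft, must_stay_crawlable)
if problems:
    print("Draft blocks pages that should be crawlable:", problems)
else:
    print("Draft looks safe to save.")
```

With the mistyped draft, all three pages are reported as blocked; changing the rule to `Disallow: /private/` leaves them crawlable.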

Resetting the robots.txt file to default

To reset the robots.txt file to its default settings, either click the “Reset to default” button in edit mode or enable auto-generation of the file.

Enabling auto-generation of the robots.txt file

1. Click the “Enable auto-generation” button. The robots.txt file returns to its default content.

2. Confirm by clicking Yes in the pop-up.

While auto-generation is enabled, the robots.txt file can’t be edited. To edit it, disable auto-generation first.